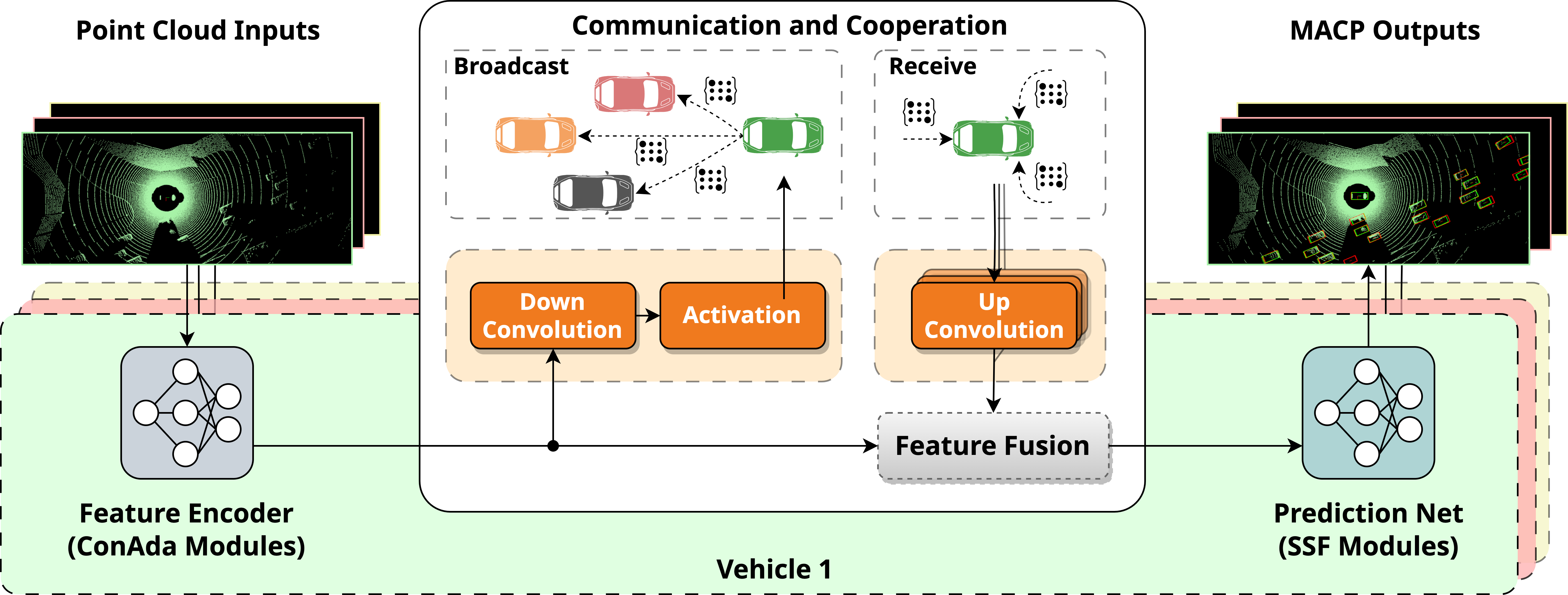

Vehicle-to-vehicle (V2V) communications have greatly enhanced the perception capabilities of connected and automated vehicles (CAVs) by enabling information sharing to "see through the occlusions", resulting in significant performance improvements. However, developing and training complex multi-agent perception models from scratch can be expensive and unnecessary when existing single-agent models show remarkable generalization capabilities. In this paper, we propose a new framework termed MACP, which equips a single-agent pre-trained model with cooperation capabilities. We approach this objective by identifying the key challenges of shifting from single-agent to cooperative settings, adapting the model by freezing most of its parameters, and adding a few lightweight modules. We demonstrate in our experiments that the proposed framework can effectively utilize cooperative observations and outperform other state-of-the-art approaches in both simulated and real-world cooperative perception benchmarks, while requiring substantially fewer tunable parameters with reduced communication costs.

| Method | Total Param (M) | Trainable Param (M) | AP@IoU=50/70 (↑), Overall | AP@IoU=50/70 (↑), 0-30m | AP@IoU=50/70 (↑), 30-50m | AP@IoU=50/70 (↑), 50-100m | AM (MB) (↓) |
|---|---|---|---|---|---|---|---|
| No Fusion | 6.58 | 6.58 | 39.8/22.0 | 69.2/42.6 | 29.3/14.4 | 4.8/1.6 | 0 |
| Late Fusion | 6.58 | 6.58 | 55.0/26.7 | 73.5/36.8 | 43.7/22.2 | 36.2/17.3 | 0.003 |
| Early Fusion | 6.58 | 6.58 | 59.7/32.1 | 76.1/46.3 | 42.5/20.8 | 47.6/21.1 | 0.96 |
| F-Cooper | 7.27 | 7.27 | 60.7/31.8 | 80.8/46.9 | 45.6/23.6 | 32.8/13.4 | 0.20 |
| V2VNet | 14.61 | 14.61 | 64.5/34.3 | 80.6/51.4 | 52.6/26.6 | 42.6/14.6 | 0.20 |
| AttFuse | 6.58 | 6.58 | 64.7/33.6 | 79.8/44.1 | 53.1/29.3 | 43.6/19.3 | 0.20 |
| V2X-ViT | 13.45 | 13.45 | 64.9/36.9 | 82.0/55.3 | 51.7/26.6 | 43.2/16.2 | 0.20 |
| CoBEVT | 10.51 | 10.51 | 66.5/36.0 | 82.3/51.1 | 52.1/28.2 | 49.1/19.5 | 0.20 |
| MACP (Ours) | 8.94 | 1.97 | 67.6/47.9 | 83.7/62.1 | 58.4/38.5 | 34.6/23.1 | 0.13 |
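
To make the efficiency claim concrete, the short Python sketch below works out two ratios from the MACP row above: the share of parameters that are trainable, and the reduction in the AM column relative to the 0.20 MB reported for the intermediate-fusion baselines (F-Cooper, V2VNet, AttFuse, V2X-ViT, CoBEVT). The variable and function names are illustrative only, and reading AM as the communication cost per the abstract is an assumption.

```python
# Figures copied from the table above; all names here are illustrative.
macp_total_m = 8.94       # MACP total parameters (millions)
macp_trainable_m = 1.97   # MACP trainable parameters (millions)
macp_am_mb = 0.13         # MACP AM (MB); assumed to be the communication cost
baseline_am_mb = 0.20     # AM (MB) shared by the intermediate-fusion baselines


def ratio(part: float, whole: float) -> float:
    """Return part / whole as a plain fraction."""
    return part / whole


# Share of MACP's parameters that are actually updated during training.
trainable_share = ratio(macp_trainable_m, macp_total_m)

# Relative reduction in AM compared with the 0.20 MB baselines.
comm_saving = 1.0 - ratio(macp_am_mb, baseline_am_mb)

print(f"trainable share: {trainable_share:.1%}")  # 22.0%
print(f"AM reduction:    {comm_saving:.1%}")      # 35.0%
```

Under these numbers, roughly a fifth of MACP's parameters are tuned, while every baseline in the table trains all of its parameters.
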

| Method | Total Param (M) | Trainable Param (M) | AP@IoU=50/70 (↑), Default Towns | AP@IoU=50/70 (↑), Culver City |
|---|---|---|---|---|
| No Fusion | 6.58 | 6.58 | 67.9/60.2 | 55.7/47.1 |
| Late Fusion | 6.58 | 6.58 | 85.8/79.1 | 79.9/66.8 |
| Early Fusion | 6.58 | 6.58 | 89.1/80.0 | 82.9/69.6 |
| F-Cooper | 7.27 | 7.27 | 88.7/79.0 | 84.6/72.8 |
| V2VNet | 14.61 | 14.61 | 89.7/82.2 | 86.0/73.4 |
| AttFuse | 6.58 | 6.58 | 90.8/81.5 | 85.4/73.5 |
| V2X-ViT | 13.45 | 13.45 | 89.1/82.6 | 87.3/73.7 |
| CoBEVT | 10.51 | 10.51 | 91.4/86.1 | 85.9/77.2 |
| AdaFusion | 7.27 | 7.27 | 91.6/85.6 | 88.1/79.0 |
| MACP (Ours) | 8.98 | 2.00 | 93.7/90.3 | 91.4/80.7 |

    @inproceedings{ma2024macp,
      title={MACP: Efficient Model Adaptation for Cooperative Perception},
      author={Ma, Yunsheng and Lu, Juanwu and Cui, Can and Zhao, Sicheng and Cao, Xu and Ye, Wenqian and Wang, Ziran},
      booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},
      pages={3373--3382},
      year={2024}
    }