A Survey on Multimodal Large Language Models for Autonomous Driving

Can Cui*, Yunsheng Ma*, Xu Cao*, Wenqian Ye*, Yang Zhou, Kaizhao Liang, Jintai Chen, Juanwu Lu, Zichong Yang, Kuei-Da Liao, Erlong Li, Kun Tang, Zhipeng Cao, Tong Zhou, Ao Liu, Xinrui Yan, Shuqi Mei, Jianguo Cao, Ziran Wang, and Chao Zheng

This is the first survey to investigate the current efforts, key challenges, and opportunities in applying Multimodal Large Language Models (MLLMs) to driving systems.

Key Features:

1. We first introduce the background of MLLMs, the development of multimodal models using LLMs, and the history of autonomous driving.
2. We give an overview of existing MLLM tools for driving, transportation, and map systems, together with existing datasets.
3. We summarize the works presented at the 1st WACV Workshop on Large Language and Vision Models for Autonomous Driving.
4. We also discuss several important problems in using MLLMs in autonomous driving systems that both academia and industry need to solve.

Recent Advancements in MLLMs

The chronological development of autonomous driving technology

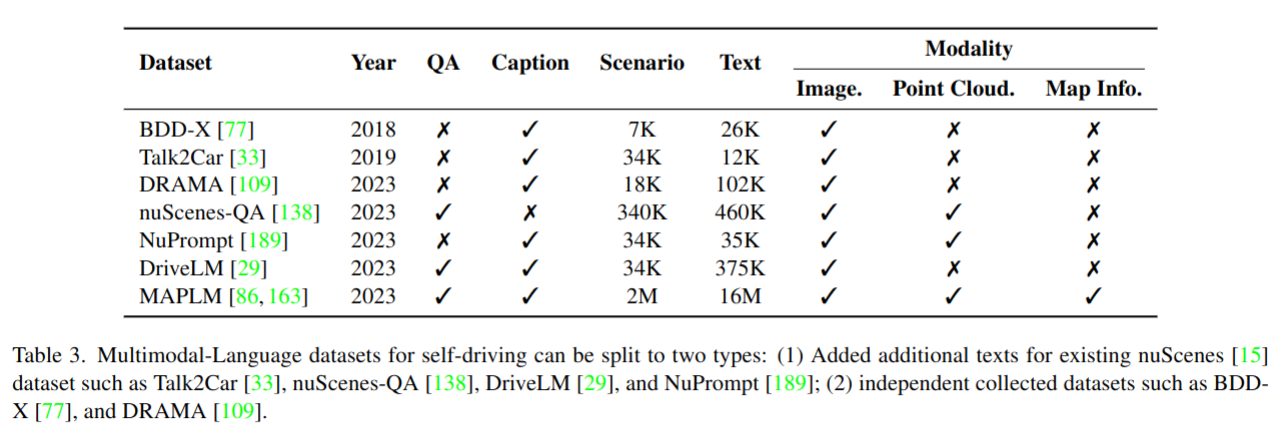

Multimodal-Language datasets for autonomous driving technology

| Name | Affiliation |
| --- | --- |
| Can Cui | Purdue University |
| Yunsheng Ma | Purdue University |
| Xu Cao | PediaMed AI & UIUC |
| Wenqian Ye | PediaMed AI & UVA |
| Ziran Wang | Purdue University |
| Chao Zheng | Tencent |