Our work focuses on pioneering research at the intersection of large language models (LLMs), vision-language models (VLMs) and autonomous driving.

We're investigating how advanced language understanding can improve vehicle decision-making and human-vehicle interaction, thereby enhancing safety and efficiency in autonomous systems. Our goal is to push the boundaries of AI in automotive technology and lead the way in developing smarter, safer, and more intuitive autonomous vehicles for the future.

Our paper

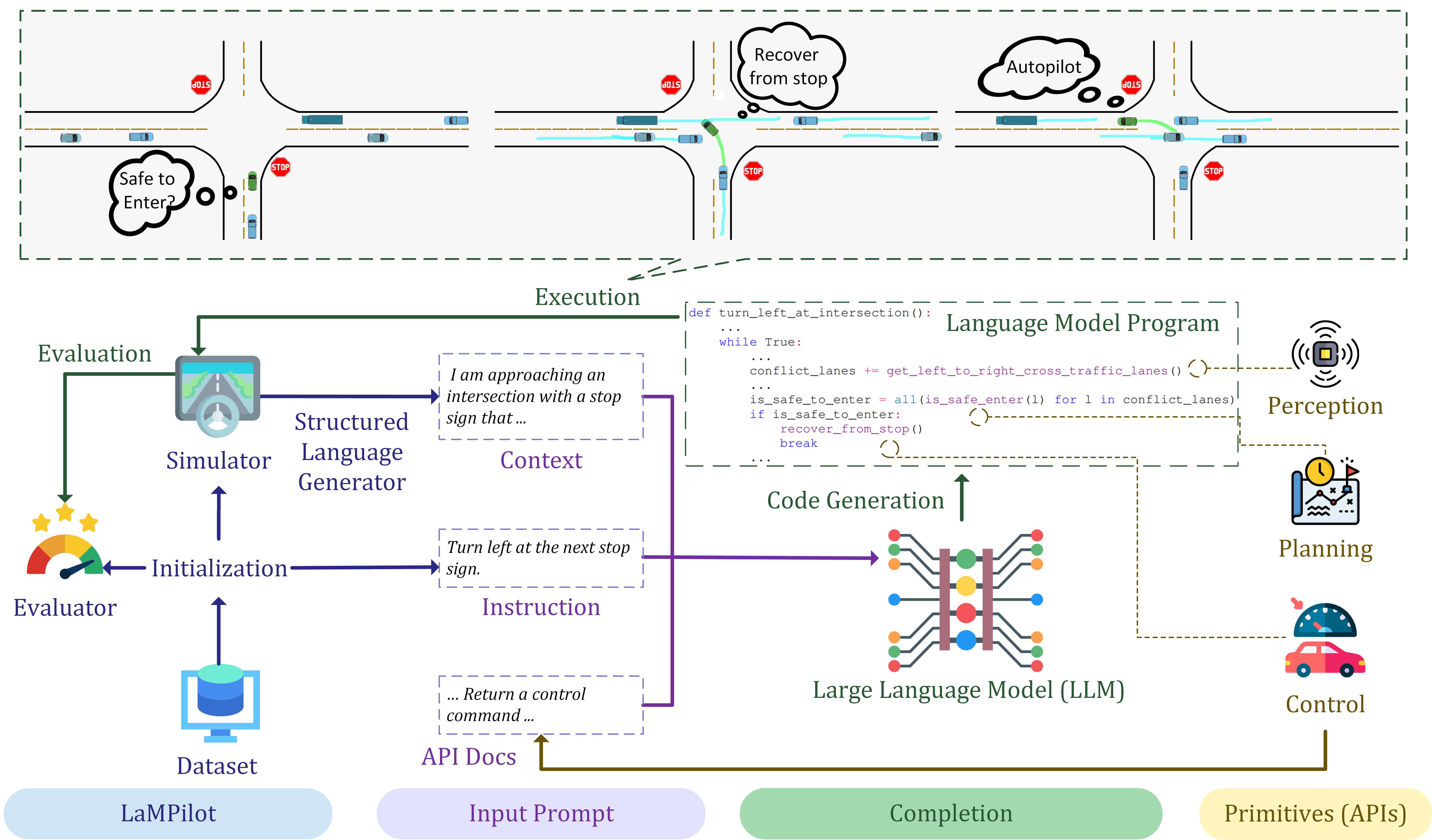

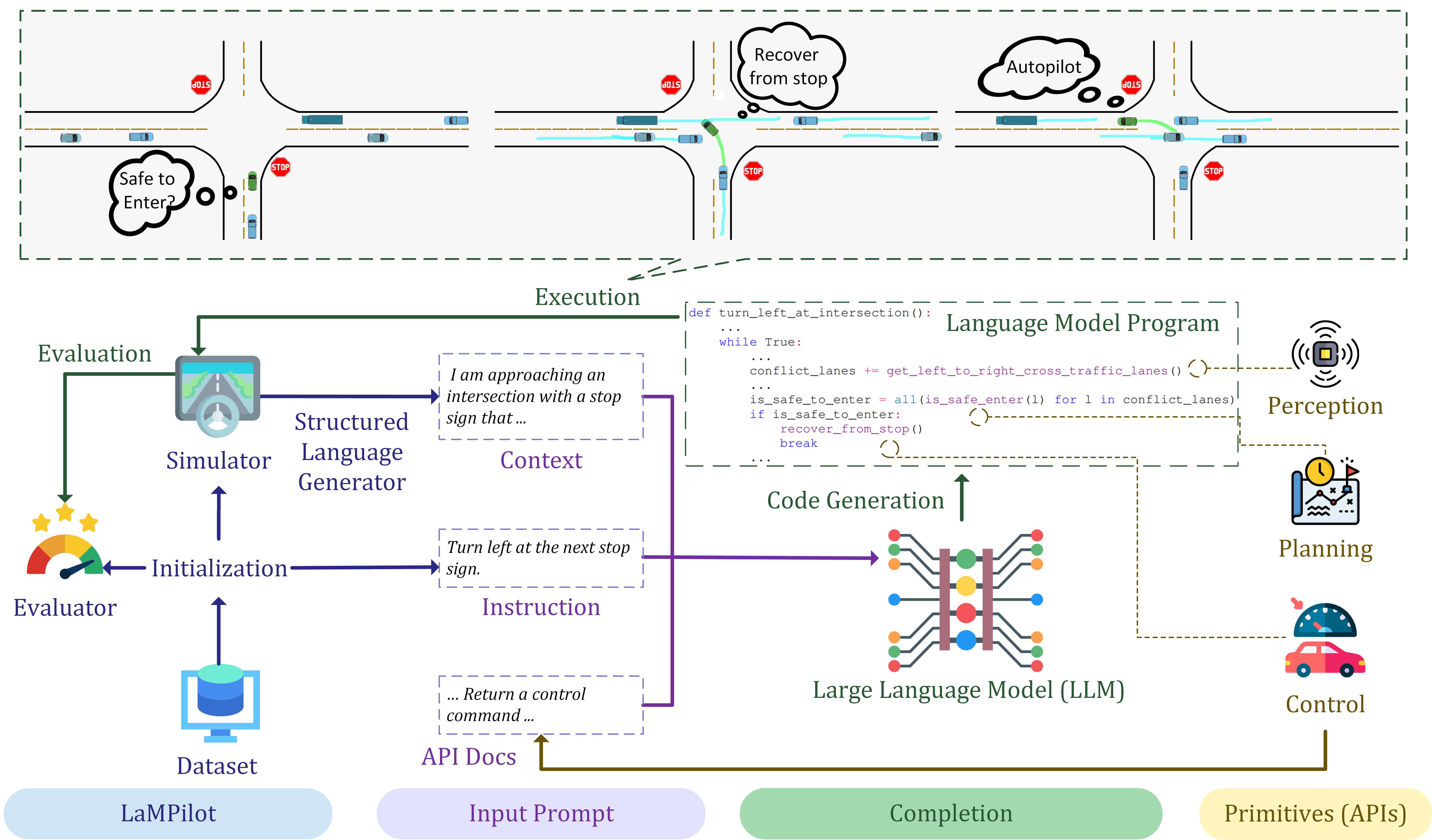

"LamPilot: An open benchmark dataset for autonomous driving with language model programs" was

accepted by CVPR 2024!

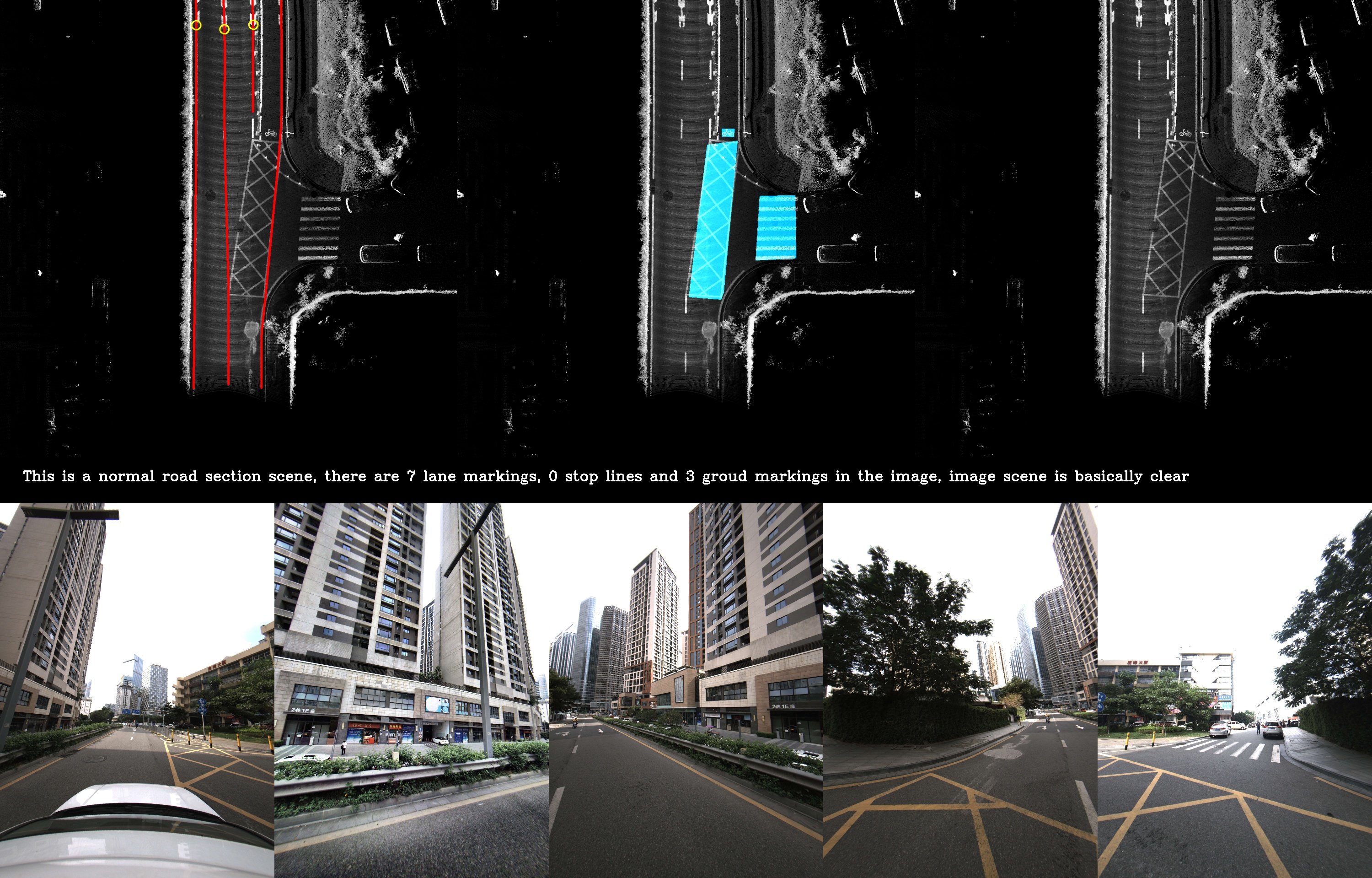

Our paper

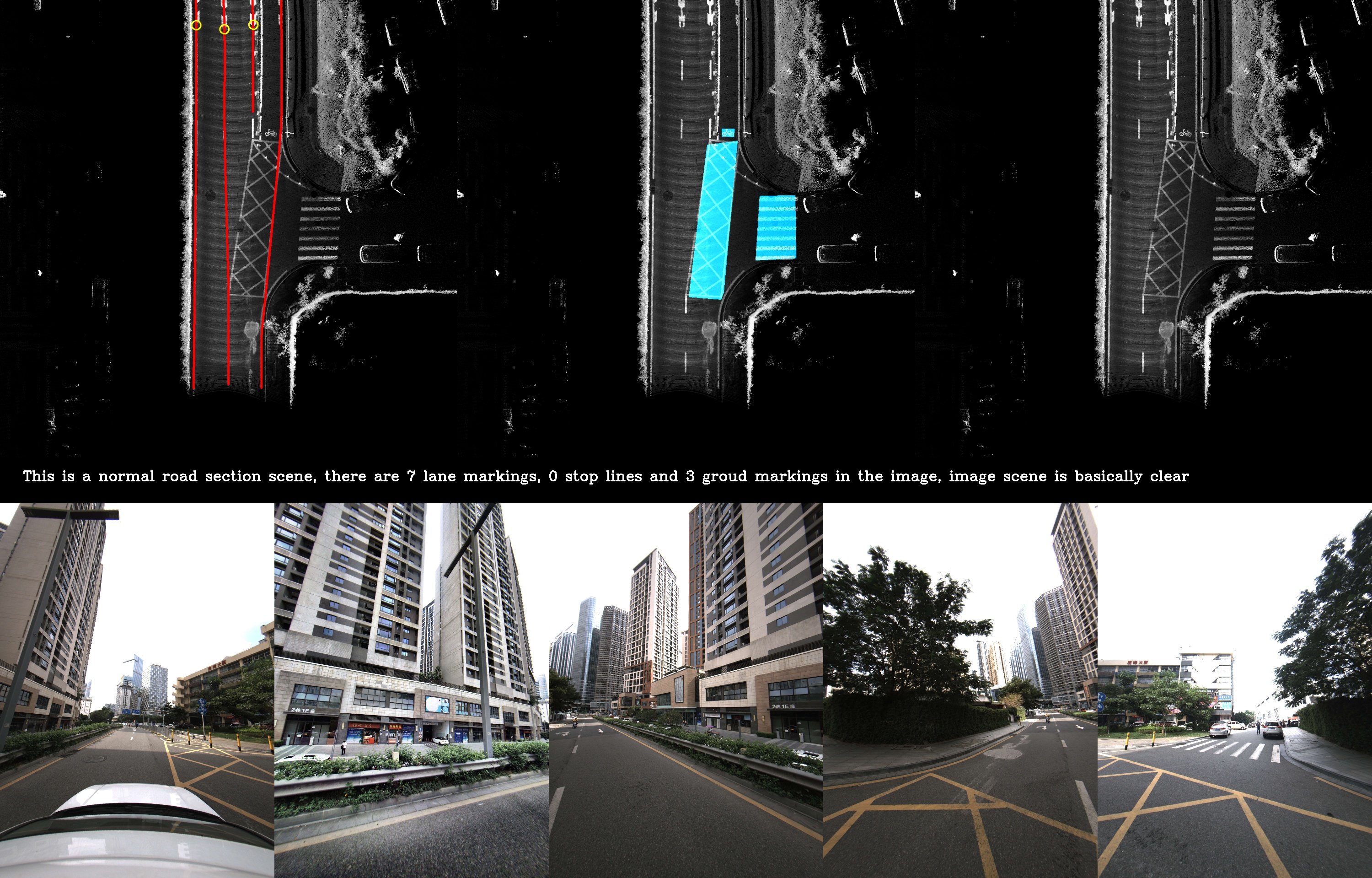

"MAPLM: A Real-World Large-Scale Vision-Language Dataset for Map and Traffic Scene

Understanding" was accepted by CVPR 2024!


We released the featured video of our Talk2Drive framework. This condensed video combines our earlier parking, intersection and highway demos, providing an overview of the features of the Talk2Drive framework.

We successfully used our Talk2Drive framework in the parking scenario, and new demos are now available!

We successfully used our Talk2Drive framework in the highway scenario, and new demos are now available!

We successfully used our Talk2Drive framework in the intersection scenario, and new demos are now available!

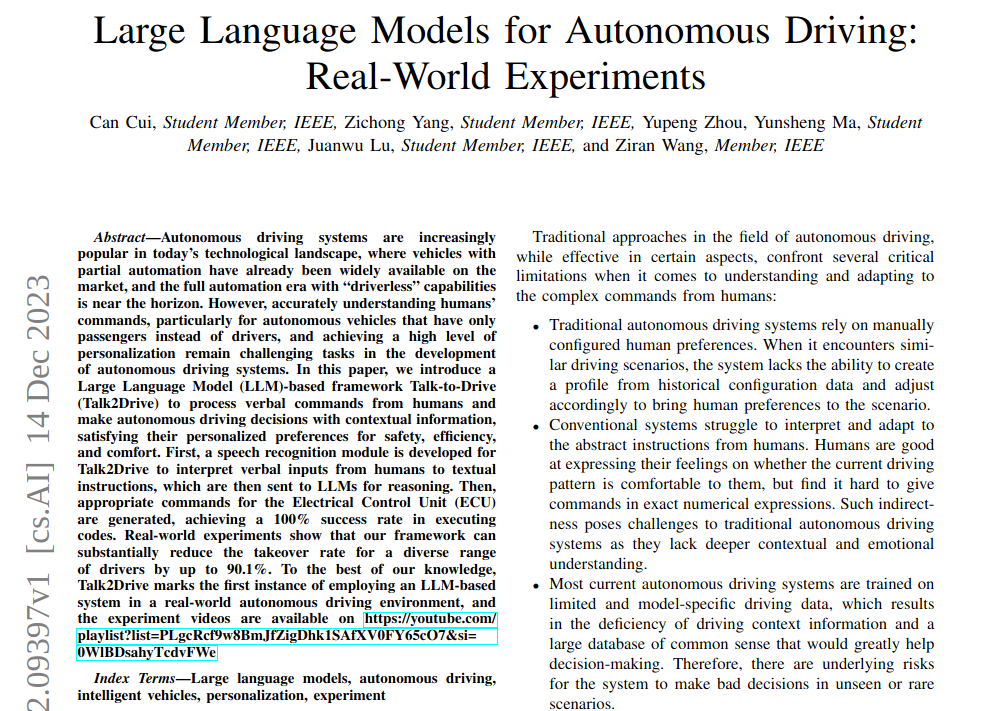

Our new paper "Large Language Models for Autonomous Driving: Real-World Experiments" about Talk2Drive is now available!

Our new benchmark paper "LaMPilot: An Open Benchmark Dataset for Autonomous Driving with Language Model Programs" is now available!

We successfully tested our Talk2Drive framework on a real vehicle in a closed parking lot.

Our new paper "A Survey on Multimodal Large Language Models for Autonomous Driving" was accepted by WACV 2024!

Our new paper "Drive as You Speak: Enabling Human-Like Interaction with Large Language Models in Autonomous Driving" was accepted by WACV 2024!

Our new paper "Receive, Reason, and React: Drive as You Say with Large Language Models in Autonomous Vehicles" is now available!
